STAR Method Interview Examples: 30+ Real Answers (2026)

If you're searching for "STAR method interview examples," you're probably tired of freezing when someone asks "Tell me about a time when..." You need concrete answers, not theory. You need to see what good actually looks like.

This guide gives you both. You'll walk away with 30+ realistic STAR examples you can adapt right now, plus a story bank system that works for any interview.

Here's what you're getting:

Why STAR works (from first principles, not buzzwords)

A story bank template you'll actually use (8 to 10 stories that cover 80% of questions)

30+ detailed STAR examples across different roles and scenarios

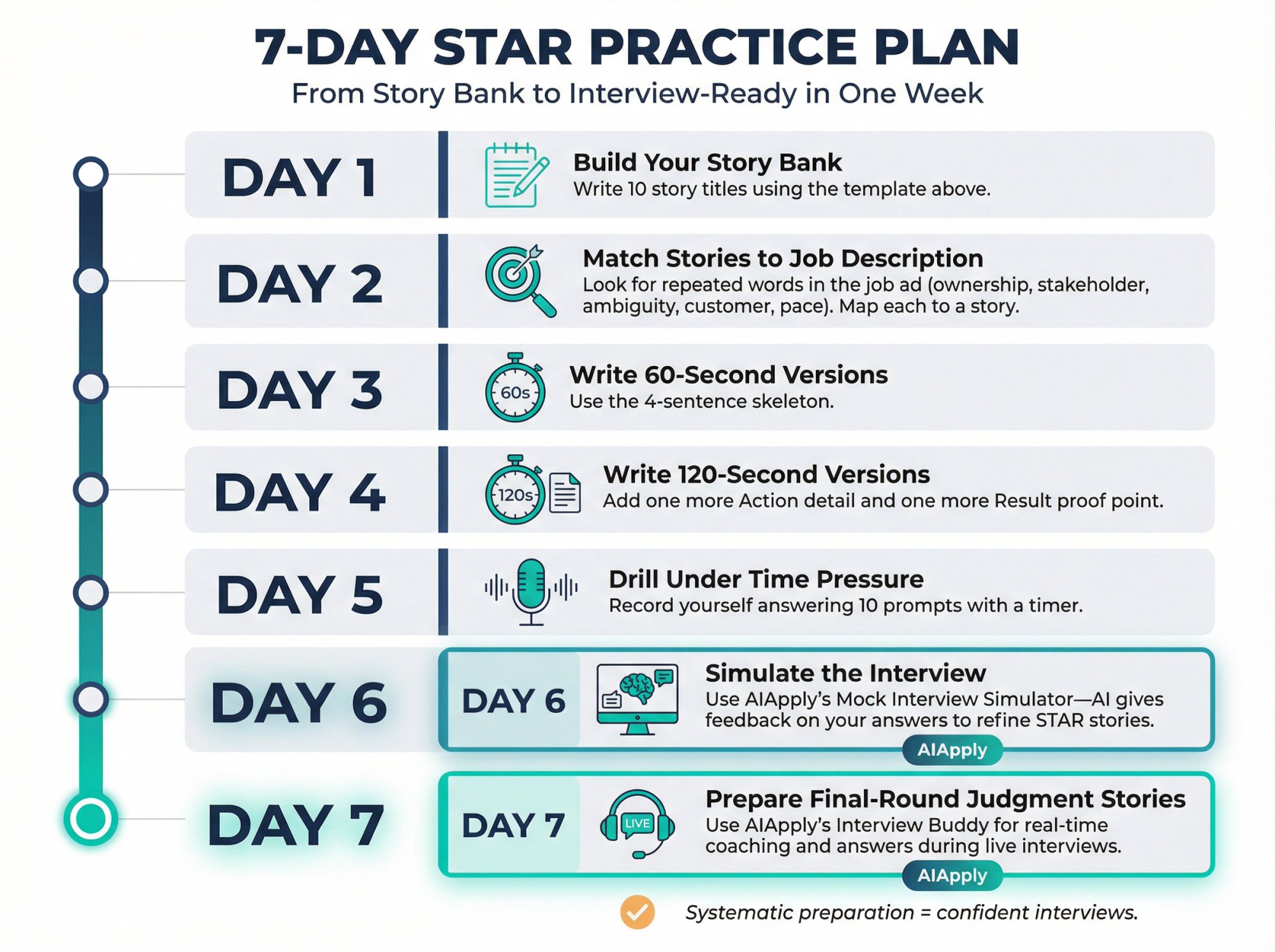

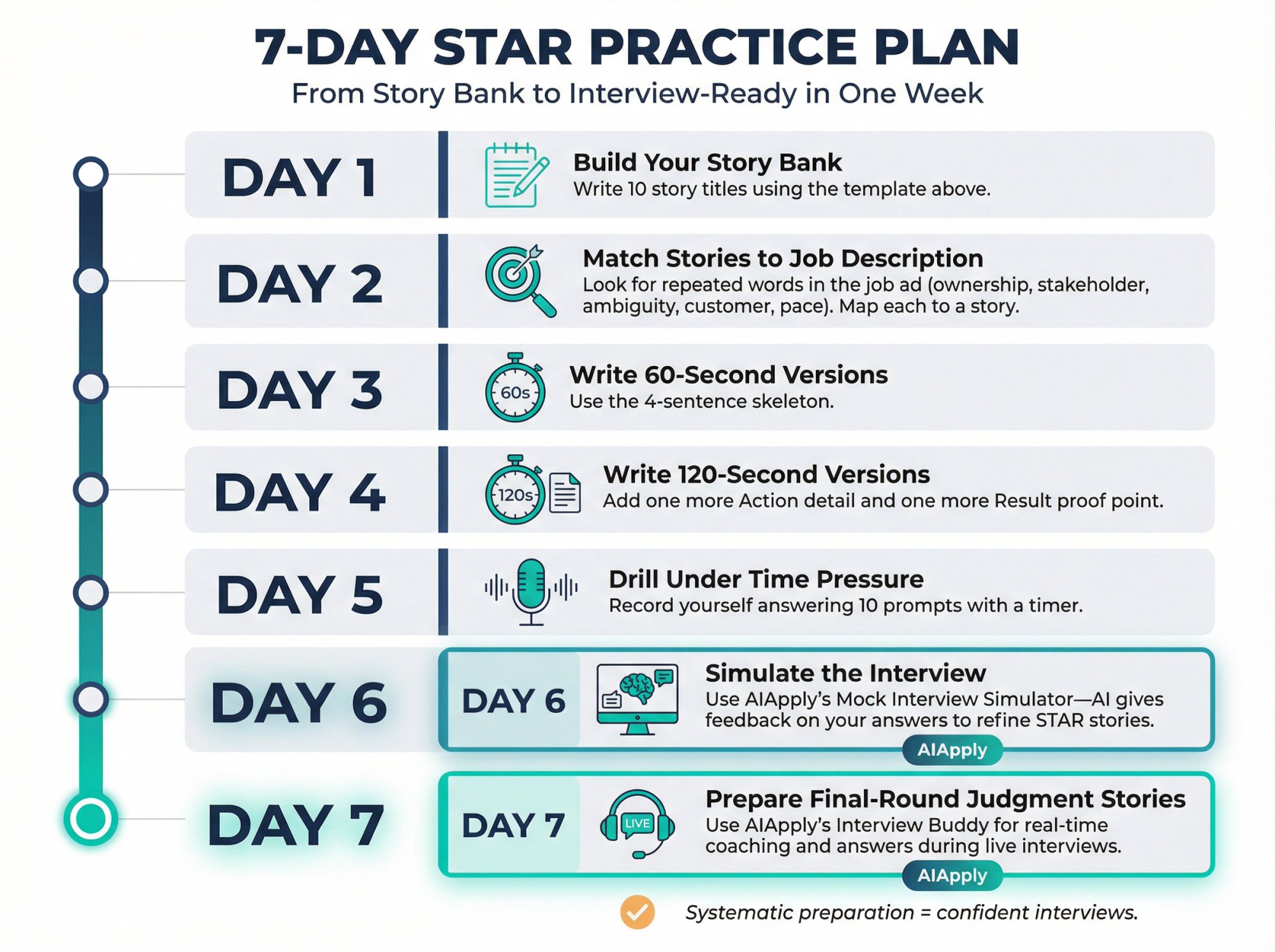

A 7-day practice plan using AIApply's Mock Interview Simulator

The job market in 2026 is more automated than ever, which raises the bar for the human interview: being clear, structured, and evidence-based matters more than it used to.

AI tools can help you prepare systematically. AIApply's platform combines resume optimization, mock interview practice, and real-time coaching to give you an end-to-end interview prep system.

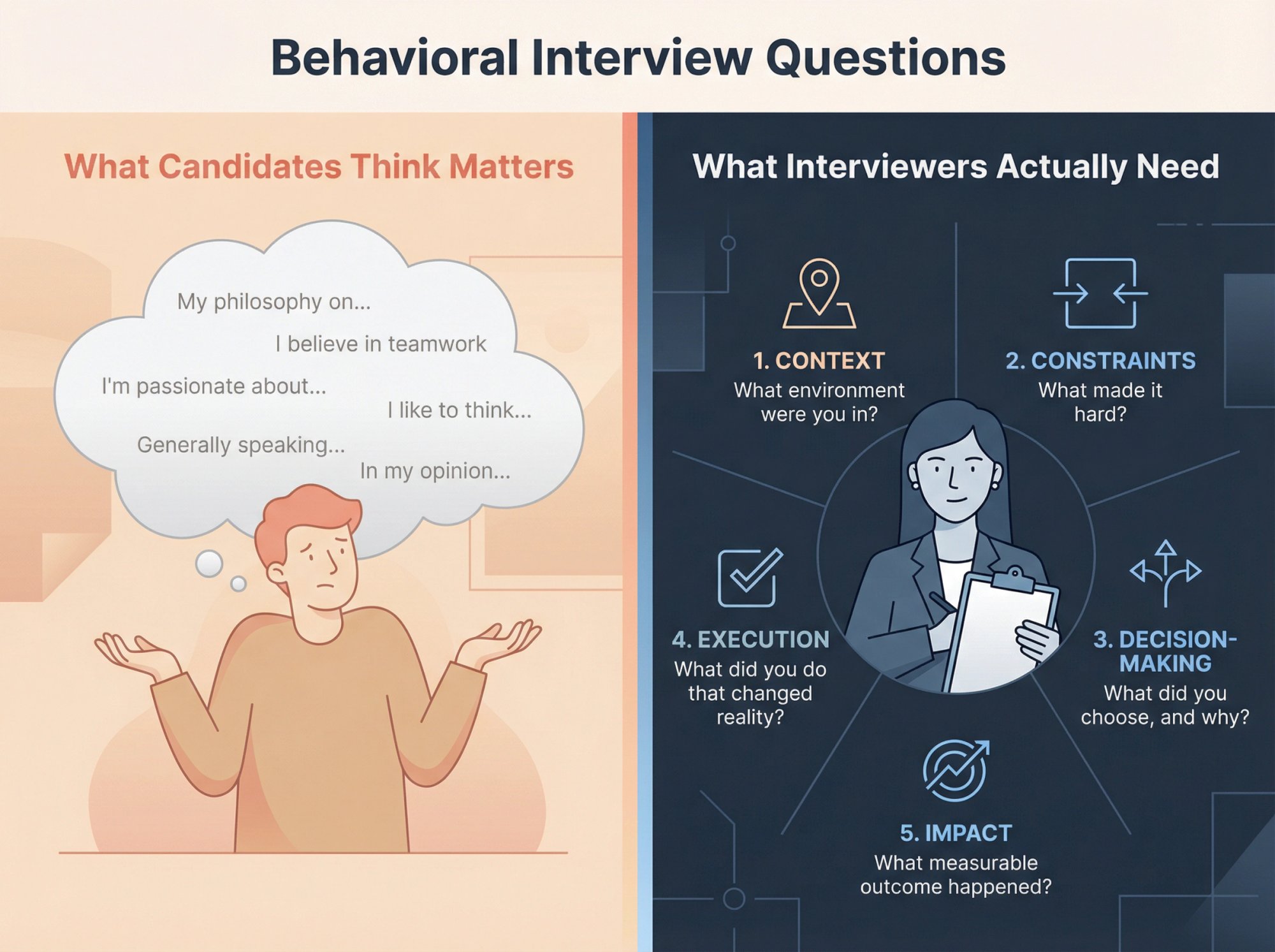

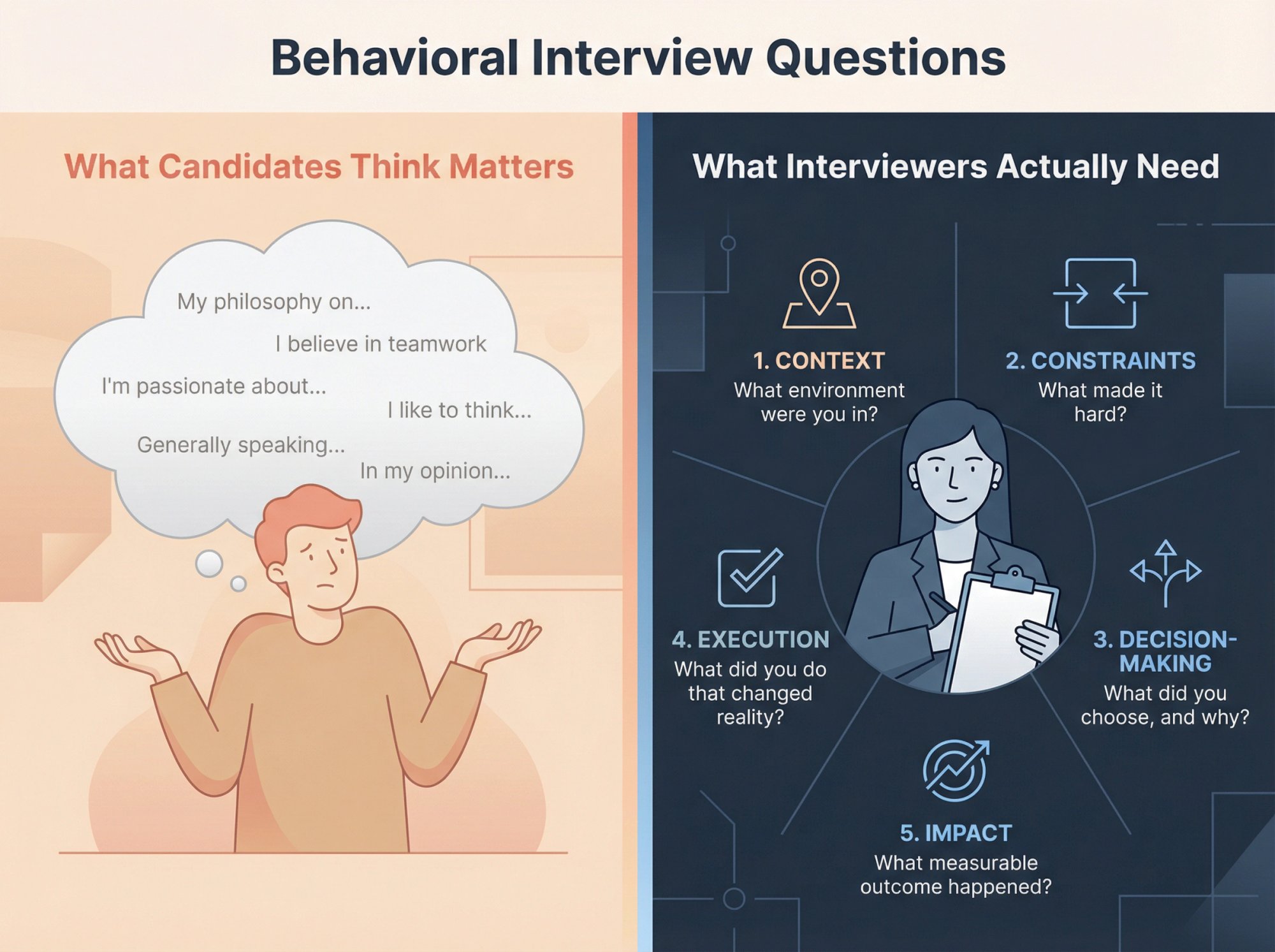

What Do Interviewers Look for in Behavioral Questions?

When someone asks "Tell me about a time you handled conflict," they're not looking for your philosophy on workplace harmony. They want evidence.

Behavioral questions test whether you can extract the signal from the noise of your own experience. Interviewers are trying to see:

• Context (what kind of environment were you operating in?)

• Constraints (what made it hard?)

• Decision-making (what did you choose, and why?)

• Execution (what did you do that changed reality?)

• Impact (what measurable outcome happened because of you?)

A 2025 systematic review in F1000Research examined factors affecting interview validity and found that structure matters enormously. Unstructured interviews are notoriously unreliable. Companies use behavioral questions because they want consistent, comparable signals across candidates.

This is why "structured interviews" exist. It's also why learning STAR isn't optional anymore.

What Is the STAR Method and How Does It Work?

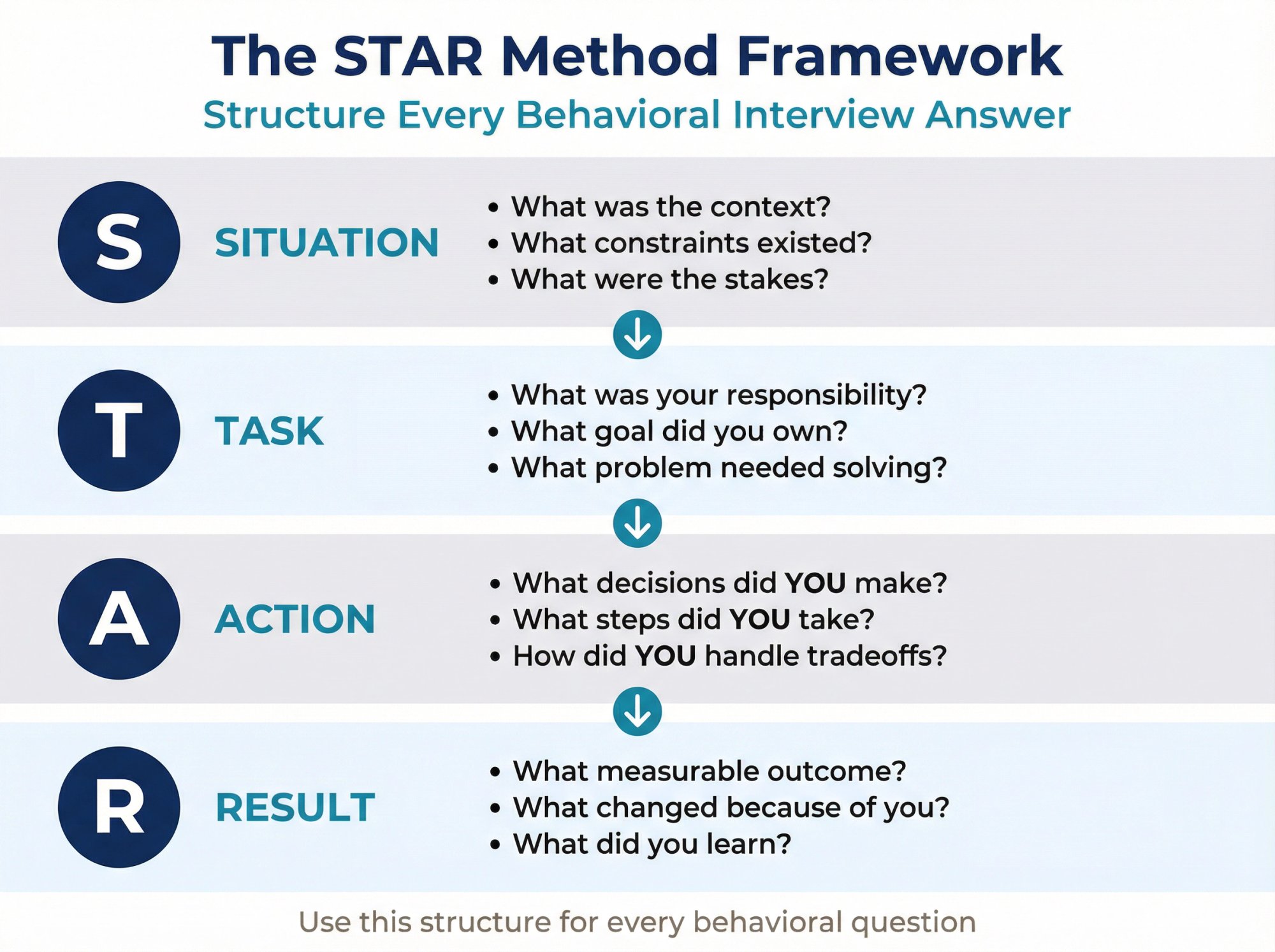

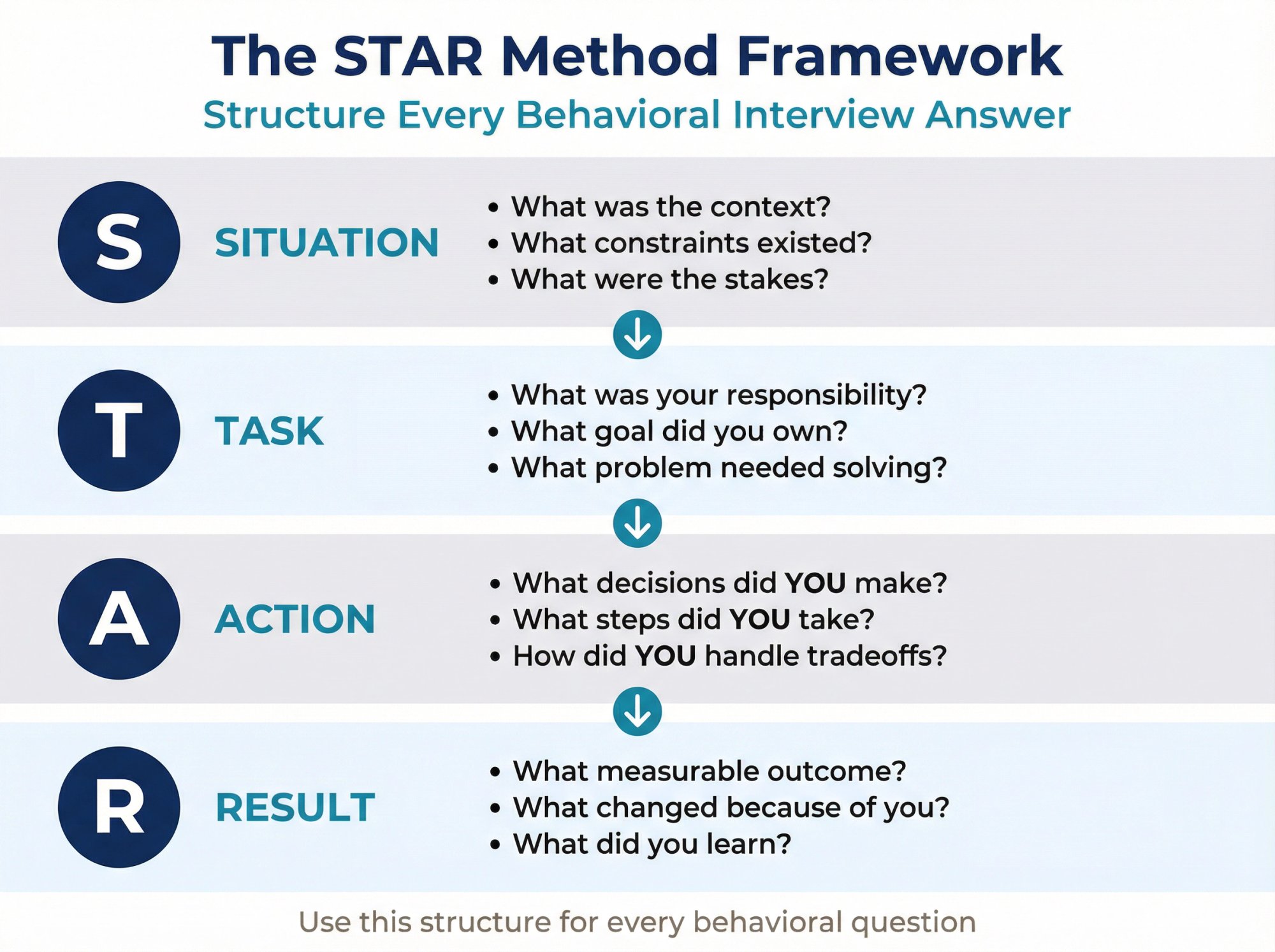

Think of STAR as a compression algorithm for experience.

Your real experience is messy. Dozens of actions, side conversations, partial failures, tradeoffs. Interviewers don't have time for the full movie. They need the trailer plus proof.

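STAR forces your brain to output the minimum information required to judge you:

Situation: the context you were operating in and what made it hard

Task: the goal you were responsible for

Action: what you specifically did, and why

Result: the measurable outcome your actions produced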

Interview preparation guides describe STAR as the technique for answering behavioral and situational questions. Harvard Business Review frames it as a way to avoid rambling and choose the right details under pressure.

And yes, big companies explicitly train candidates to use it. Amazon's interview guide calls STAR the cornerstone of its interviews.

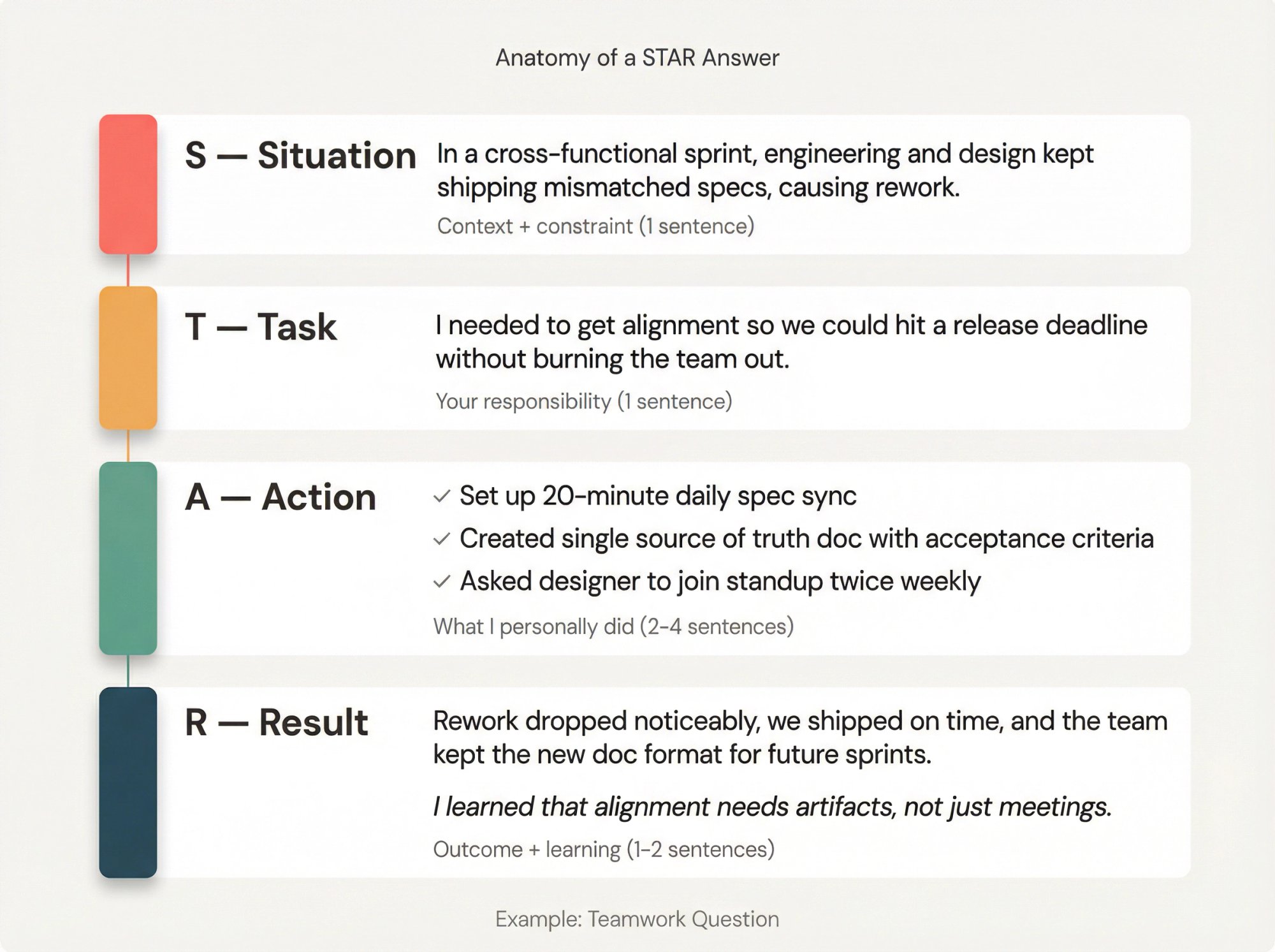

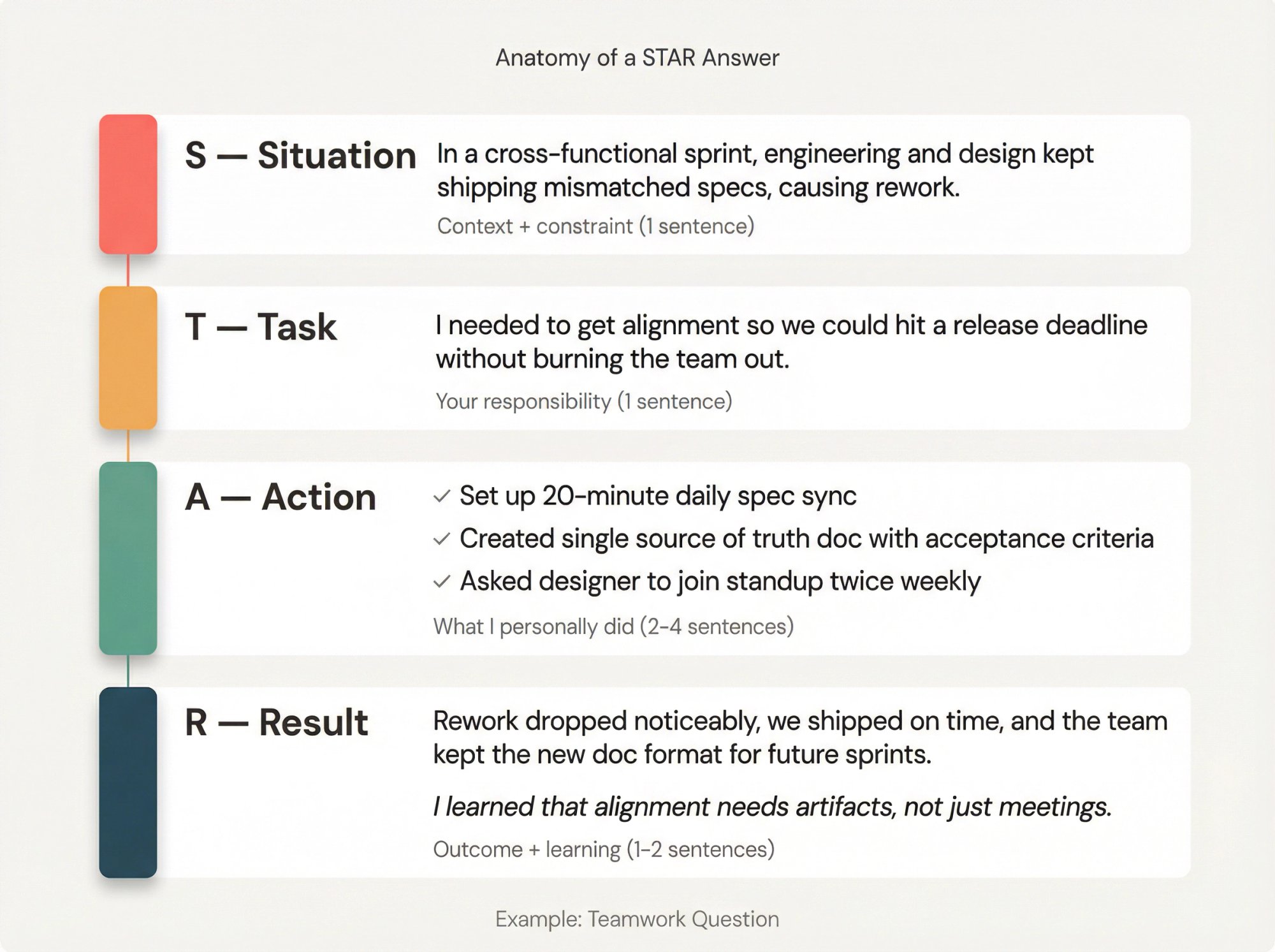

How to Structure Perfect STAR Answers Every Time

Most people fail STAR by doing one of these:

→ Spending 70% of the answer on Situation

→ Describing what "we" did instead of what "I" did

→ Skipping Results or giving vibes instead of proof

→ Talking like a resume, not a story

Use This 4-Sentence Skeleton Instead

Situation (1 sentence): "At [company/team], we faced [problem] with [constraint/stakes]."

Task (1 sentence): "I was responsible for [goal] by [deadline/quality bar]."

Action (2 to 4 sentences): "I did [step 1], [step 2], [step 3], and coordinated with [stakeholders] to handle [tradeoff]."

Result (1 to 2 sentences): "We got [metric/outcome]. I learned [lesson] and changed [behavior]."

Add One Line to Make It Memorable

A common critique of STAR is that it can miss the learning signal. Interview preparation experts explicitly call out the importance of including what you learned.

You don't need to abandon STAR. Just add a clean final line:

"Since then, I [changed process], which prevented [problem] from repeating."

That single line often separates "solid" from "hire."

How to Build a Story Bank That Actually Works

Here's the uncomfortable truth: It's not about memorizing 30 answers. It's about selecting 8 to 10 stories that can be reused for 80% of questions.

Structure matters, but at higher stakes, story selection can matter more than perfectly following a format.

Your Story Bank Target: 8 to 10 Stories

Pick stories that cover these dimensions:

Conflict or disagreement

Failure or mistake

Learning something fast

Leading without authority

Driving a process improvement

Handling pressure or a deadline

Delivering impact with limited resources

Influencing a stakeholder (client, exec, partner)

A customer-focused win

A values or ethics moment (integrity test)

Copy-Paste Story Bank Template

Use this as a working doc:

STORY TITLE:
ROLE + CONTEXT:
PRIMARY SKILL SIGNAL: ownership | collaboration | problem solving | resilience | growth | leadership | communication
SITUATION (1 to 2 lines):
TASK (1 line):
ACTIONS (3 to 6 bullets):
- Decision I made:
- What I did first:
- How I aligned people:
- How I handled tradeoffs:
- Tools/approach:
RESULTS (2 to 3 bullets):
- Metric impact:
- Business impact:
- People/quality impact:
LESSON (1 line):
EVIDENCE I CAN MENTION (links, doc names, dashboards, feedback, awards):
LIKELY FOLLOW-UPS (2 to 3):
- What would you do differently?
- How did you measure success?
- What did you learn?

How Long Should STAR Interview Answers Be?

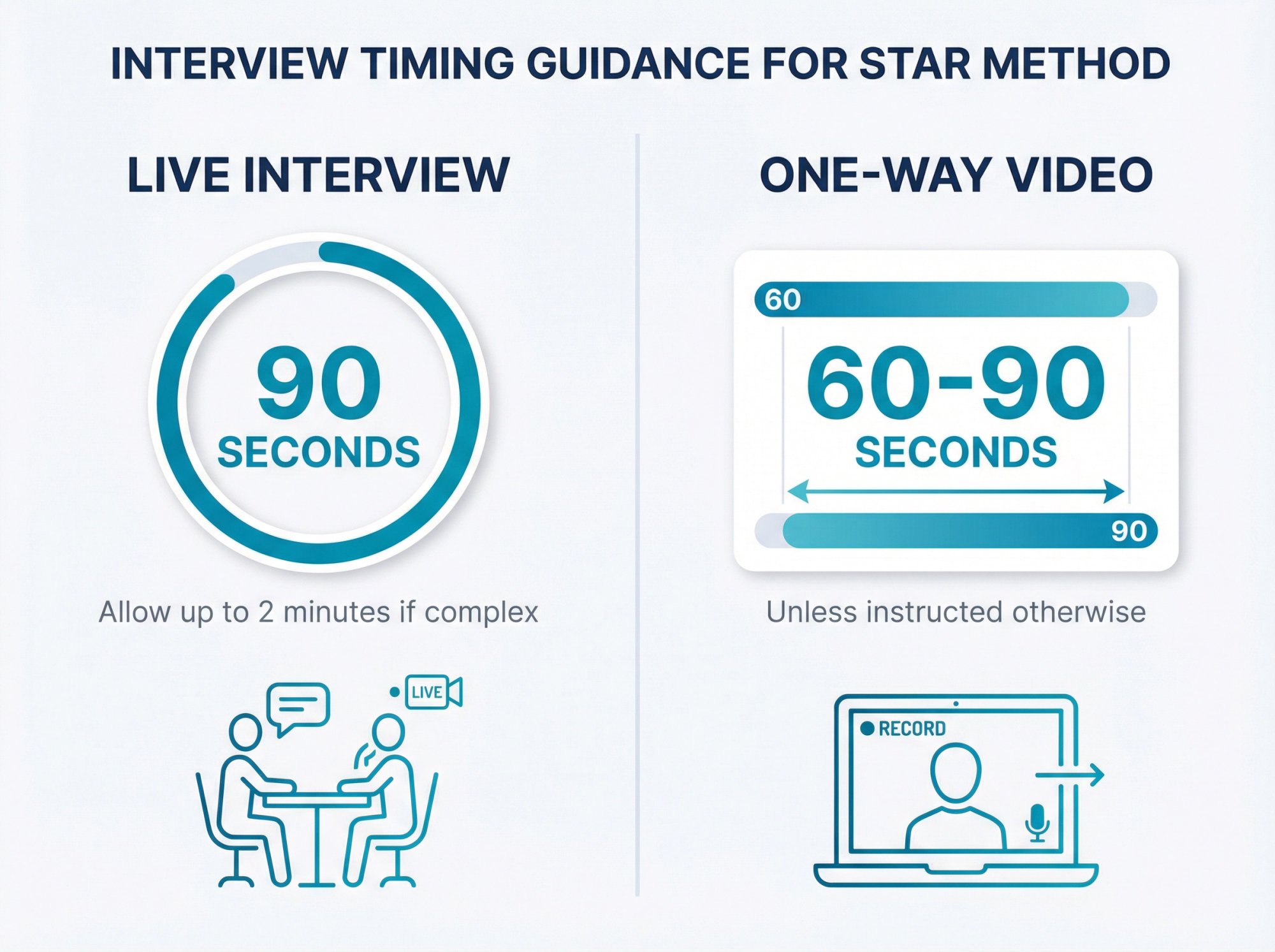

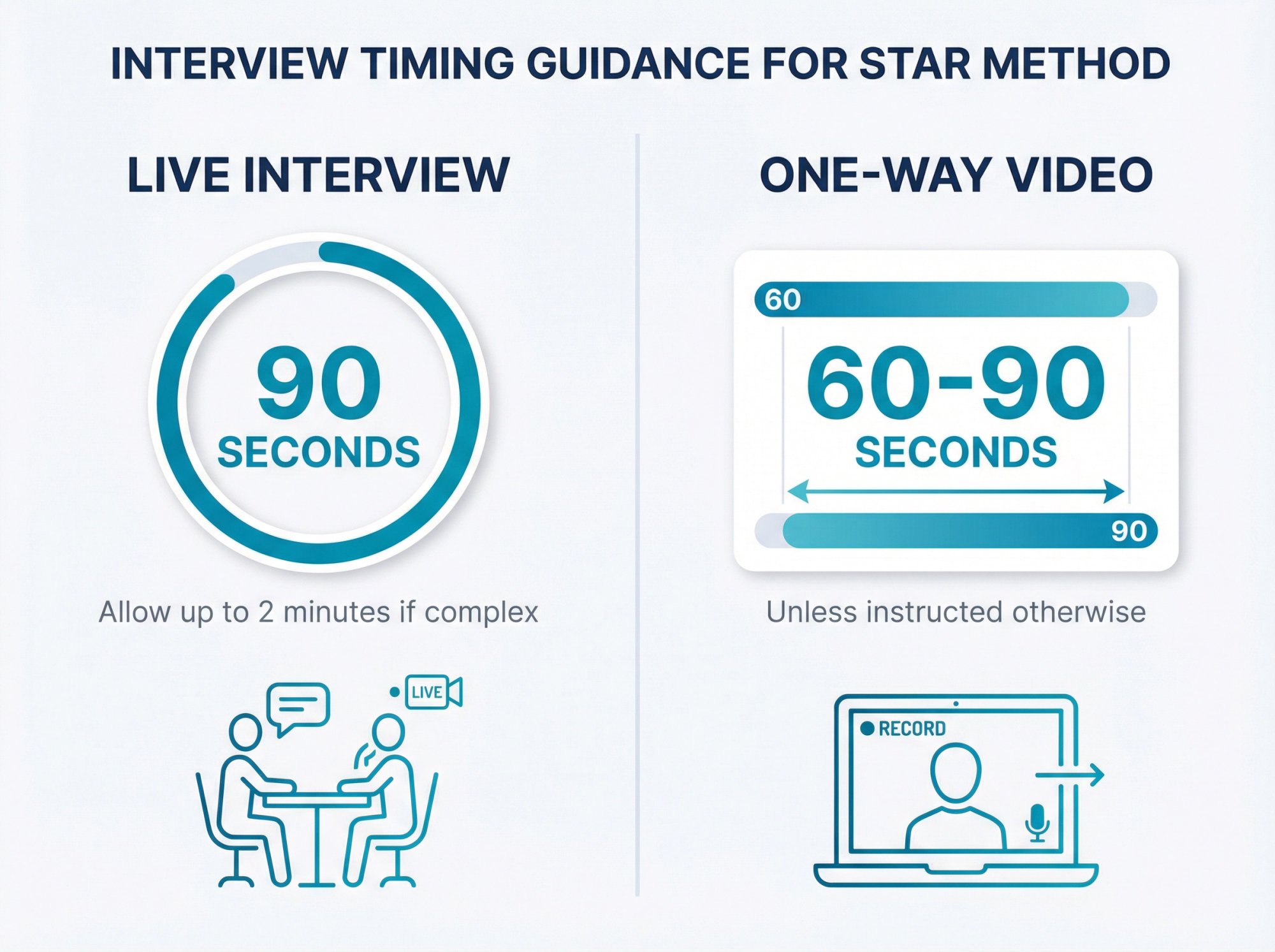

Two practical constraints:

You need enough detail to be credible

You cannot monopolize the interview

If you're in a one-way video interview, tighter is usually better.

The Safest Pacing Rule

Live interview: aim for 90 seconds, allow up to 2 minutes if complex

One-way video: aim for 60 to 90 seconds unless instructed otherwise

30+ STAR Method Interview Examples

These are sample answers. Do not lie. Do not copy word-for-word. Use the structure, then swap in your real details.

1. Teamwork: "Tell me about a time you worked on a team."

What they're testing: collaboration, role clarity, coordination

Situation: In a cross-functional sprint, engineering and design kept shipping mismatched specs, causing rework.

Task: I needed to get alignment so we could hit a release deadline without burning the team out.

Action: I set up a 20-minute daily "spec sync," created a single source of truth doc with acceptance criteria, and added a lightweight sign-off step before implementation. I also asked the designer to join the first 10 minutes of standup twice a week to catch misunderstandings early.

Result: Rework dropped noticeably, we shipped on time, and the team kept the new doc format for future sprints. I learned that alignment needs artifacts, not just meetings.

2. Conflict: "Tell me about a time you had conflict with a coworker."

What they're testing: maturity, empathy, resolution skill

Situation: A teammate and I disagreed on whether to refactor a module or patch it before a customer deadline.

Task: I had to resolve the disagreement fast without damaging the relationship or the timeline.

Action: I asked for 15 minutes to map options (quickest patch, partial refactor, full refactor). We estimated risk and effort, then I proposed a staged plan: patch now with guardrails, refactor next sprint with tests. I also clarified ownership so the plan didn't become "everyone's job."

Result: We met the deadline and avoided a later regression. The teammate later told me the option framing made the decision feel fair instead of personal.

3. Difficult Stakeholder: "Describe a time you handled a difficult stakeholder."

What they're testing: influence, communication, expectation management

Situation: A sales lead promised a custom feature to a prospect before confirming feasibility, and then pushed engineering daily.

Task: I needed to protect delivery quality while keeping the deal alive.

Action: I scheduled a short triage call, asked for the prospect's true must-haves, and turned it into a "minimum viable" scope we could deliver safely. I gave the sales lead a clear timeline with what was in and out, and I wrote a one-page summary they could send the customer.

Result: The deal progressed without derailing the sprint, and the customer accepted the phased plan. I learned that stakeholders calm down when they get a credible plan, not vague reassurance.

4. Ownership: "Tell me about a time you took initiative."

What they're testing: proactivity, seeing around corners

Situation: Our onboarding had a big drop-off in week one, but no one owned the metrics.

Task: I wanted to find the bottleneck and improve activation without waiting for a formal project.

Action:

① Pulled basic funnel data and identified the biggest drop at the "first successful setup" step

② Ran 5 user calls to understand why

③ Wrote a short proposal: simplify the setup flow, add tooltips, introduce a 2-minute checklist

Result: Activation improved in the following weeks and support tickets about setup decreased. The best part: we finally had a single onboarding dashboard the team used weekly.

5. Failure: "Tell me about a time you failed."

What they're testing: accountability, learning, resilience

Situation: I owned a report that leadership used weekly, but one week the numbers were wrong because a data source changed.

Task: I had to fix the issue fast and prevent it from happening again.

Action: I immediately flagged the error, corrected the report, and traced the root cause to a schema update. Then I added validation checks, documented assumptions, and set up a simple alert when source fields change.

Result: The report regained trust, and we did not repeat the issue. I learned that reliability is part of the deliverable, not an optional extra.

6. Mistake: "Tell me about a time you made a mistake at work."

What they're testing: integrity, recovery, prevention

Situation: I sent a customer-facing email with a wrong date, which caused confusion for a launch.

Task: I needed to correct it quickly and reduce the downstream impact.

Action: I sent a correction within minutes, apologized without over-explaining, and messaged our support team with a script in case customers replied. Afterward, I created a checklist for announcements that required a second set of eyes for dates, pricing, and links.

Result: We limited confusion, and the checklist became standard for future launches. The key lesson: speed of correction matters more than trying to hide the error.

7. Pressure: "Tell me about a time you worked under pressure."

What they're testing: prioritization, calm execution

Task: I needed to stabilize the system and coordinate updates across engineering and support.

Result: We stabilized quickly, reduced repeat tickets, and wrote a postmortem with preventative changes. I learned that clear roles prevent panic.

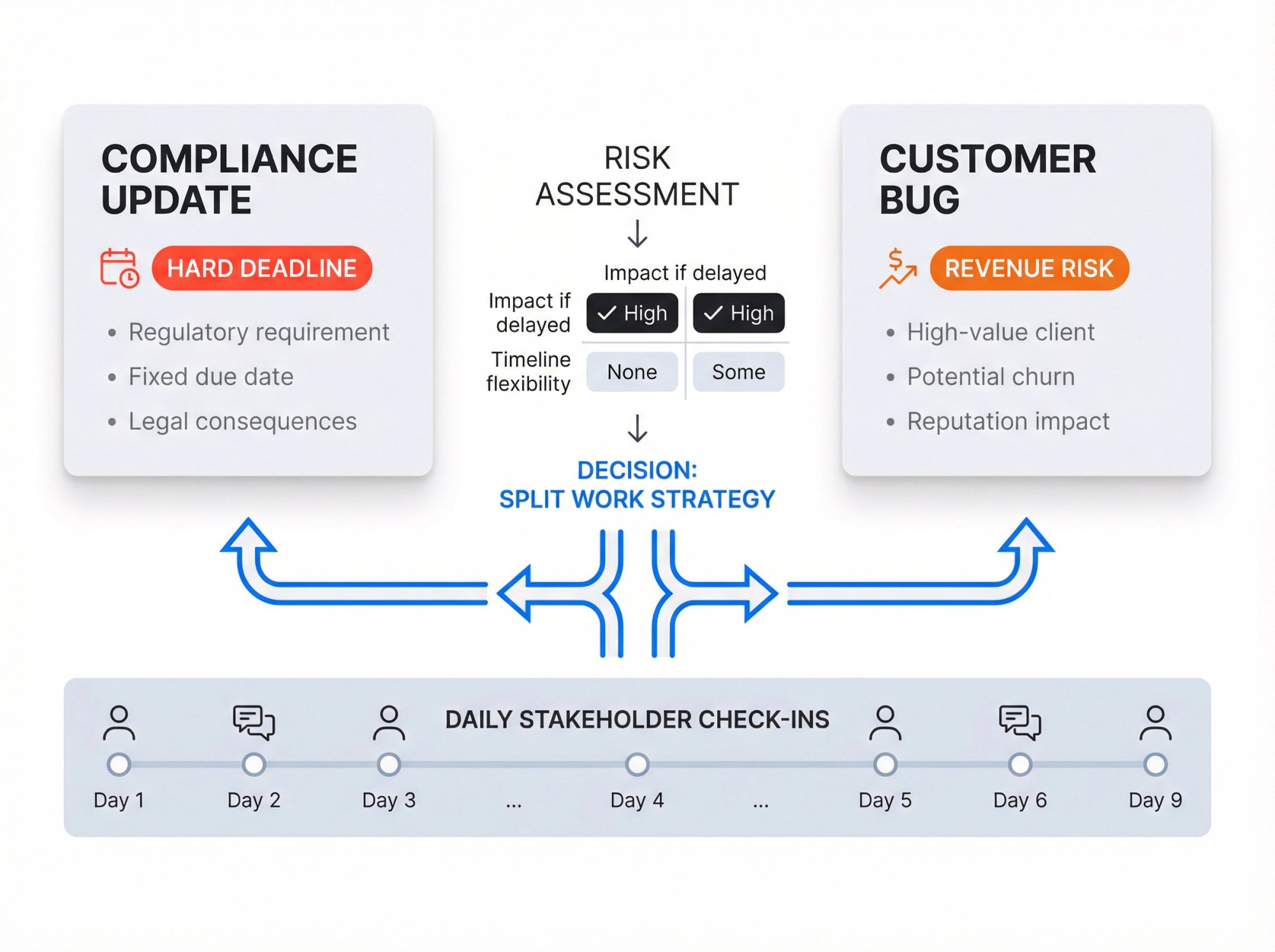

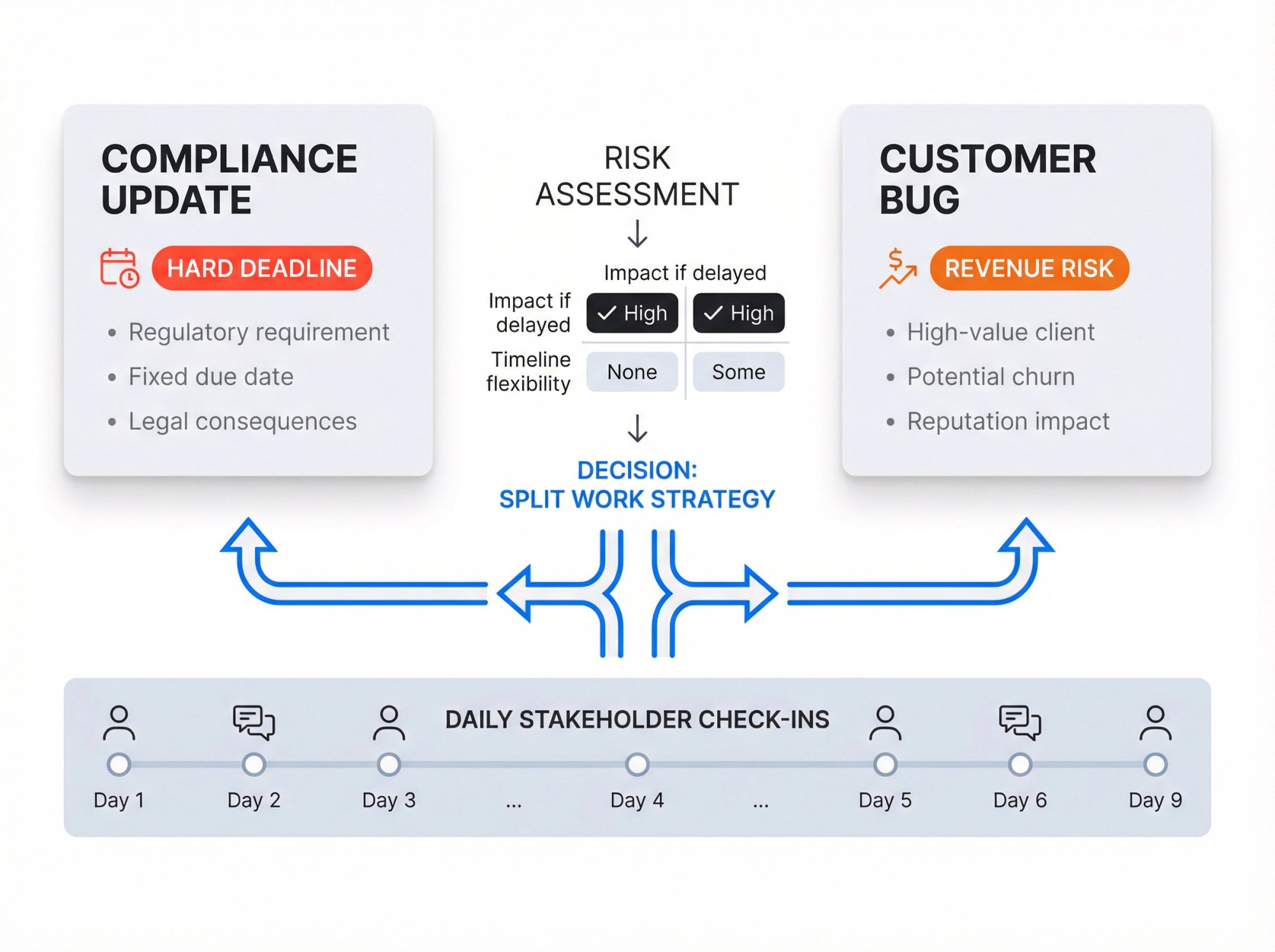

8. Prioritization: "Tell me about a time you had to juggle multiple priorities."

What they're testing: tradeoffs, stakeholder management

Situation: I had two urgent requests: a compliance update and a high-value customer bug.

Task: I needed to decide what to do first and communicate it without frustrating anyone.

Action: I assessed risk (compliance had a hard deadline, bug had revenue risk). I split work: I owned the compliance plan and delegated bug investigation to a teammate, then I created a timeline and checked in with both stakeholders daily.

Result: We met compliance and resolved the bug within the week. I learned to separate "urgent" from "high-risk" and communicate the logic early.

9. Learning Fast: "Tell me about a time you had to learn something quickly."

What they're testing: learning agility, resourcefulness

Situation: I was asked to support a client integration using a tool I had never used before.

Task: I needed to become productive in days, not weeks.

Action:

→ Broke it into learning milestones (basic setup, common failure modes, working demo)

→ Used vendor docs and watched two short tutorials

→ Scheduled a 30-minute call with someone internally who had done it before

→ Documented the setup as I went

Result: The integration went live on time, and my documentation cut future setup time for the team.

10. Feedback: "Tell me about a time you received constructive criticism."

What they're testing: coachability, self-awareness

Situation: My manager told me my updates were detailed but too long, which slowed decision-making.

Task: I needed to communicate more effectively without losing accuracy.

Action: I started using a 3-part update format (headline, key data, decision needed). I also timeboxed written updates to 5 minutes and moved supporting detail into a link or appendix.

Result: Meetings got faster and I was asked to present more often because stakeholders trusted the clarity. The lesson: communication is a product, not a dump of facts.

11. Above and Beyond: "Tell me about a time you went above and beyond."

What they're testing: initiative, but also boundaries and judgment

Situation: A teammate went offline unexpectedly during a critical customer deliverable.

Task: I needed to keep the delivery moving without creating chaos.

Action: I took over the highest-risk pieces, communicated status to the customer, and wrote a short plan for the remaining work so others could jump in. I also documented what I changed so the teammate could re-enter smoothly.

Result: The customer delivery stayed on track and the team avoided duplicated work.

"Above and beyond" is best when it reduces risk and increases clarity, not when it creates hero dependency.

12. Communication: "Explain something complex to a non-technical person."

What they're testing: clarity, empathy, teaching

Situation: A non-technical stakeholder was confused about why a feature would take multiple sprints.

Task: I needed them to understand the constraints so we could agree on scope.

Action: I used an analogy tied to their world, then showed a simple diagram (input, processing, edge cases, testing). I emphasized what we could ship first versus what needed deeper work, and asked them to restate the plan to confirm understanding.

Result: We aligned on a phased approach, fewer last-minute changes happened, and the stakeholder became a stronger partner in later planning.

13. Decision-Making: "Tell me about a tough decision you made."

What they're testing: judgment, tradeoffs

Task: I had to recommend an approach to leadership.

Action: I mapped the risks above, then recommended delaying by one week to add monitoring and a rollback path. I proposed a smaller release slice to keep momentum.

Result: The release was stable and easier to support. Lesson: speed without safety creates hidden costs.

14. Ambiguity: "Tell me about working with unclear requirements."

What they're testing: problem framing, asking the right questions

Situation: A leader asked for "a dashboard" but could not define the decisions it should support.

Task: I needed to clarify the real need before building the wrong thing.

Action: I asked what decisions they wanted to make weekly, what metrics they trusted, and what would change if the number moved. I mocked up two simple versions, got feedback fast, and then built the smallest dashboard that answered the top three decisions.

Result: Adoption was high because it matched their real workflow. Clarity comes from decision questions, not feature requests.

15. Process Improvement: "Describe a time you improved a process."

What they're testing: operational thinking, continuous improvement

Situation: Our handoffs between sales and implementation caused missed requirements and rework.

Task: I wanted to reduce rework without slowing the pipeline.

Action: I analyzed the last 10 projects, found repeated missing fields, and created a standardized intake form plus a 15-minute kickoff script. I also added a "definition of ready" checklist.

Result: Rework dropped and projects started cleaner. Small standardization can remove a lot of pain.

16. Customer Service: "Tell me about a difficult customer."

What they're testing: empathy, de-escalation, problem solving

Situation: A customer was angry about repeated delays and threatened to churn.

Task: I needed to calm the situation and fix the underlying issue.

Action:

① Acknowledged their frustration and asked for the impact on their workflow

② Proposed a clear plan: immediate workaround, escalation path, promised update cadence

③ Internally worked with engineering to identify the cause

④ Gave the customer transparent timelines

Result: The customer stayed, and we turned their feedback into a product fix. Escalation works best when paired with a communication rhythm.

17. Sales: "Tell me about handling a tough objection."

What they're testing: persuasion, listening, value framing

Situation: A prospect said our solution was "too expensive" compared to a competitor.

Task: I needed to uncover the real objection and keep the deal moving.

Action: I asked what success looked like and what failure would cost them. Then I reframed pricing around total cost (implementation time, support load, risk reduction). I offered a smaller initial package tied to a measurable outcome.

Result: We got agreement to a pilot instead of losing the deal. Objections often mean "I do not see the ROI yet."

18. Marketing: "Tell me about a campaign that didn't perform."

What they're testing: iteration, data-driven thinking

Situation: A paid campaign had strong clicks but weak conversions.

Task: I needed to diagnose the funnel and improve conversion without increasing spend.

Action: I audited landing page load time and message match, then ran an A/B test on the headline and CTA. I also tightened targeting to reduce low-intent traffic.

Result: Conversion improved and cost per qualified lead dropped. Traffic metrics are not success metrics.

19. Product: "Tell me about prioritizing features."

What they're testing: prioritization, stakeholder alignment

Situation: Engineering capacity was tight, and three teams wanted their feature "now."

Task: I had to recommend a priority order that the org could accept.

Action: I created a lightweight scoring model (customer impact, revenue risk, effort, strategic alignment). I shared the scores transparently, got input from stakeholders, and aligned on a phased roadmap.

Result: We reduced conflict because decisions felt principled, not political. Transparency beats persuasion when everyone is stressed.

20. Engineering: "Tell me about debugging a hard problem."

What they're testing: systematic thinking, persistence

Situation: We had intermittent timeouts that only happened under peak load.

Task: I needed to identify root cause and prevent recurrence.

Action:

→ Reproduced the issue using load tests

→ Added tracing to isolate latency spikes

→ Found a dependency call that degraded under specific concurrency

→ Implemented caching and added a circuit breaker

Result: Timeouts dropped and performance stabilized under load. Hard bugs become solvable when you can make them predictable.

21. Data: "Tell me about using data to influence a decision."

What they're testing: analytical reasoning, persuasion

Situation: Leadership debated whether to expand a feature or fix reliability issues first.

Task: I needed to provide evidence that clarified the tradeoff.

Action: I analyzed support tickets, churn reasons, and usage data, and showed that reliability issues affected a small group but drove disproportionate churn risk. I proposed a two-week reliability sprint with a measurable target.

Result: We prioritized reliability, churn stabilized, and future feature adoption improved. Data is persuasive when tied to business outcomes.

22. Operations: "Tell me about a logistics disruption."

What they're testing: calm execution, contingency planning

Situation: A supplier delay threatened a customer delivery schedule.

Task: I needed to protect delivery dates while minimizing extra cost.

Action: I identified alternative suppliers, renegotiated partial shipments, and updated customers proactively with options. I also created a buffer-stock rule for future peak periods.

Result: Most deliveries stayed on time and we reduced future disruptions. Ops is risk management with relationships.

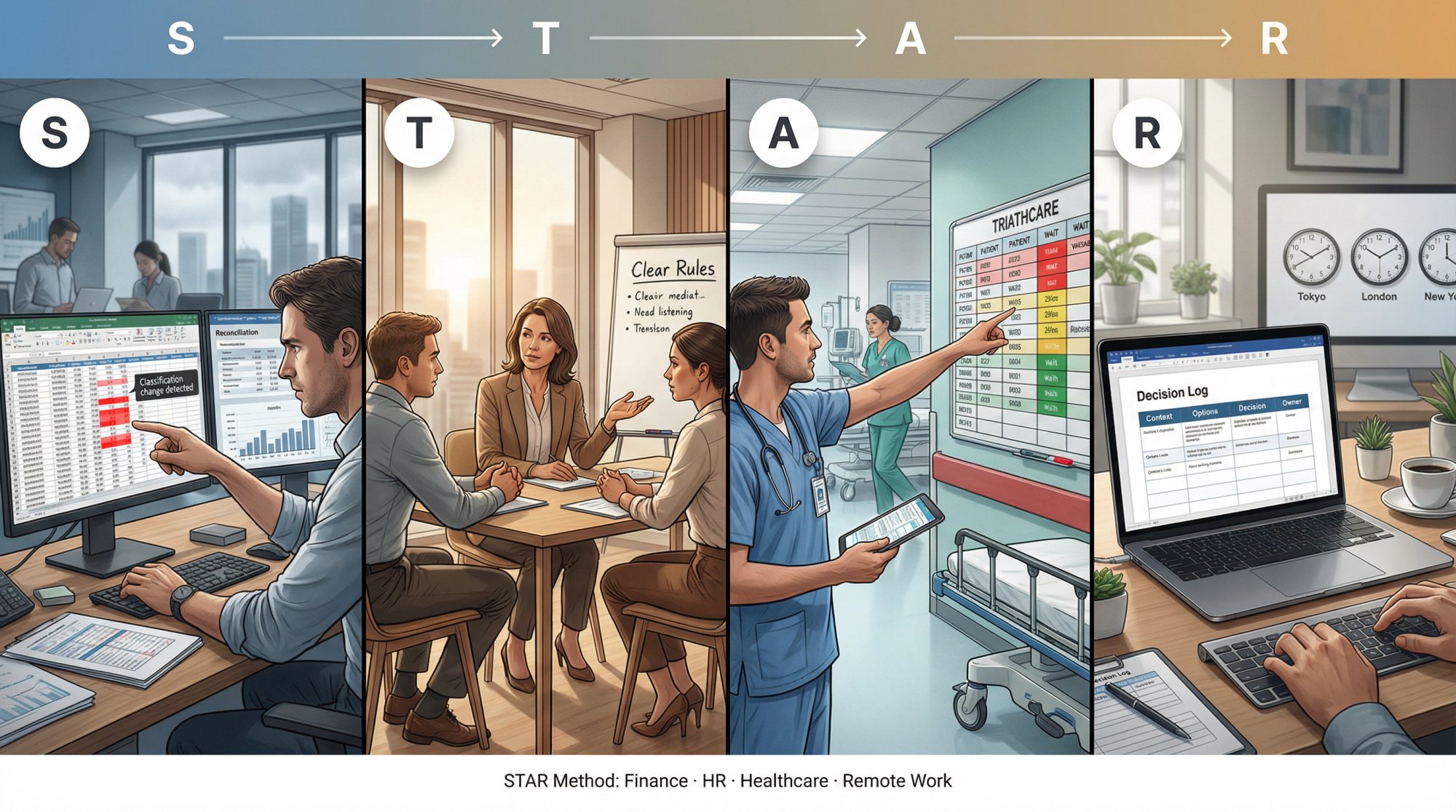

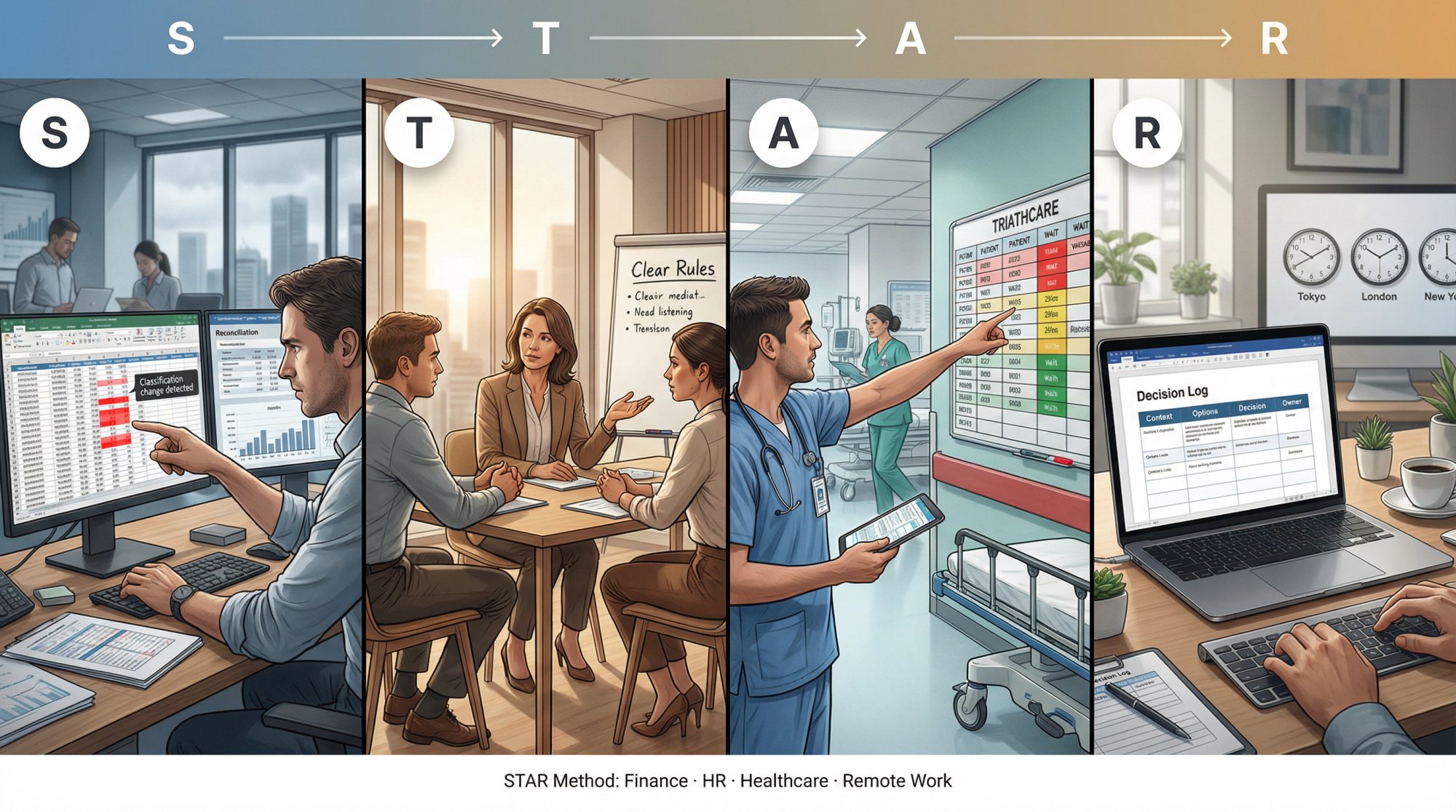

23. Finance: "Tell me about finding an error in financial data."

What they're testing: attention to detail, integrity, problem solving

Situation: A monthly close report showed a variance that didn't match operational reality.

Task: I had to find the cause before leadership made decisions on bad data.

Action: I traced the variance to a classification change and reconciled transactions across two systems. Then I updated the mapping rule and documented the change for the team.

Result: The report became consistent, and the close went more smoothly the next month. Small data definition changes can cause big business confusion.

24. HR: "Tell me about handling a sensitive situation."

What they're testing: discretion, fairness, communication

Situation: Two team members escalated a conflict that was affecting morale.

Task: I needed to de-escalate and restore working norms without taking sides.

Action: I spoke to each person separately to understand facts and impact, then facilitated a structured conversation with clear rules (specific behaviors, expected changes, follow-up checkpoints).

Result: Collaboration improved and the team moved forward. Fairness is about process as much as outcome.

25. Healthcare: "Tell me about a high-stress situation."

What they're testing: prioritization, composure, protocol thinking

Situation: During a busy shift, two urgent needs arrived at once with limited staff.

Task: I had to prioritize safely and coordinate help.

Action: I followed triage protocol, delegated tasks based on skill, and communicated quickly and calmly. I documented actions clearly to keep continuity.

Result: We maintained safe care and reduced confusion. Under stress, protocols exist to protect good judgment.

26. Entry-Level: "Tell me about leading a project."

What they're testing: leadership potential, coordination

Situation: In a university group project, the team had no plan and deadlines were slipping.

Task: I needed to organize the work and get us to submission quality.

Action:

① Broke the project into tasks with assigned owners

② Set weekly checkpoints

③ Created a shared doc so everyone worked from the same source

④ Raised an early issue when a section was off-track and helped rewrite it with the teammate

Result: We submitted on time and received strong feedback. Leadership starts with clarity and follow-through, not titles.

27. Remote Work: "Tell me about working effectively remotely."

What they're testing: async communication, independence

Situation: Our team was distributed across time zones, and decisions kept getting lost in chats.

Task: I needed to improve coordination without adding meetings.

Action: I introduced decision logs (one short post per decision with context, options, final call). I also used short video updates for complex topics and set response-time expectations.

Result: Fewer misunderstandings, faster execution, and less repeated discussion. Remote work rewards written clarity.

28. Change: "Tell me about adapting to a big change."

What they're testing: resilience, flexibility

Situation: Mid-quarter, leadership changed priorities, and our project was paused.

Task: I needed to shift quickly without wasting work.

Action: I summarized what we had learned so far, identified reusable components, and proposed a smaller deliverable that still created value under the new priority. I helped the team re-plan with minimal thrash.

Result: We avoided sunk-cost spirals and shipped something useful anyway. Adaptability is turning change into a smaller, winnable plan.

29. Ethics: "Tell me about choosing between easy and right."

What they're testing: integrity, courage, judgment

Situation: A teammate suggested presenting a metric in a way that made results look better but was misleading.

Task: I needed to protect trust and decision quality.

Action: I raised the concern privately, explained how it could mislead leadership, and proposed a transparent framing (show both metrics and clarify tradeoffs). If they refused, I was prepared to escalate.

Result: We presented the honest version, and leadership made a better decision. Trust is hard to win and easy to lose.

30. "Why should we hire you?" (using STAR)

What they're testing: relevance, self-awareness, ability to connect story to role

Situation: In my last role, I inherited a messy workflow where tasks slipped because ownership was unclear.

Task: I needed to create a system that improved execution without creating bureaucracy.

Action: I defined ownership rules, introduced a simple weekly planning ritual, and created a visible tracker so blockers surfaced early. I coached the team on how to use it, not just what it was.

Result: Delivery became predictable and stakeholders regained confidence. That's why I'm a fit here: this role needs someone who can bring clarity, align people, and execute.

How to Ace One-Way Video Interviews with STAR

One-way video interviews usually present predetermined questions with no live interviewer in the room. Video interview platforms stress that this format removes the chance to clarify misunderstandings, so your answers need to be clear, concise, and structured.

Practical implication: write your STAR answers so they still make sense without follow-up questions.

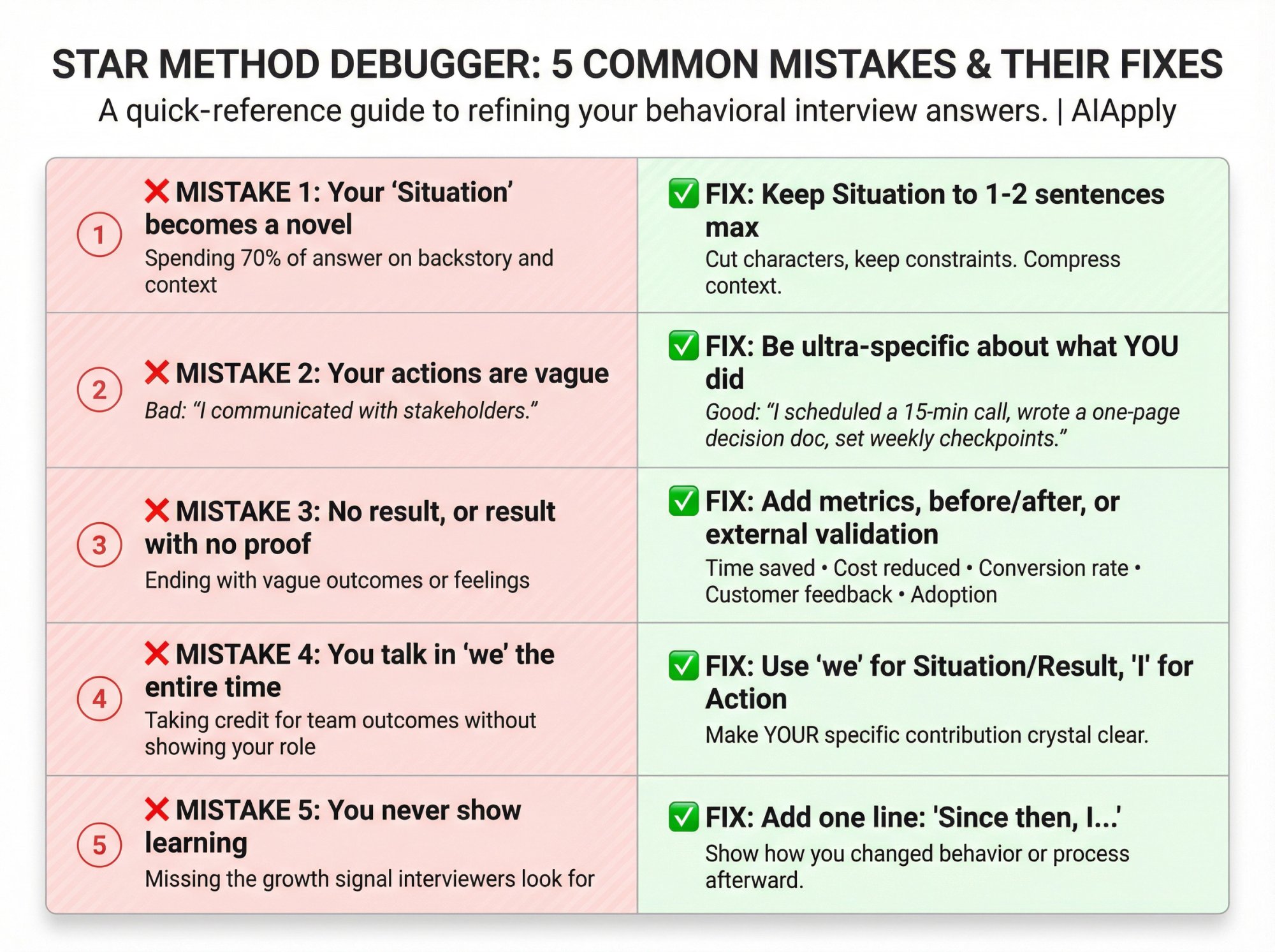

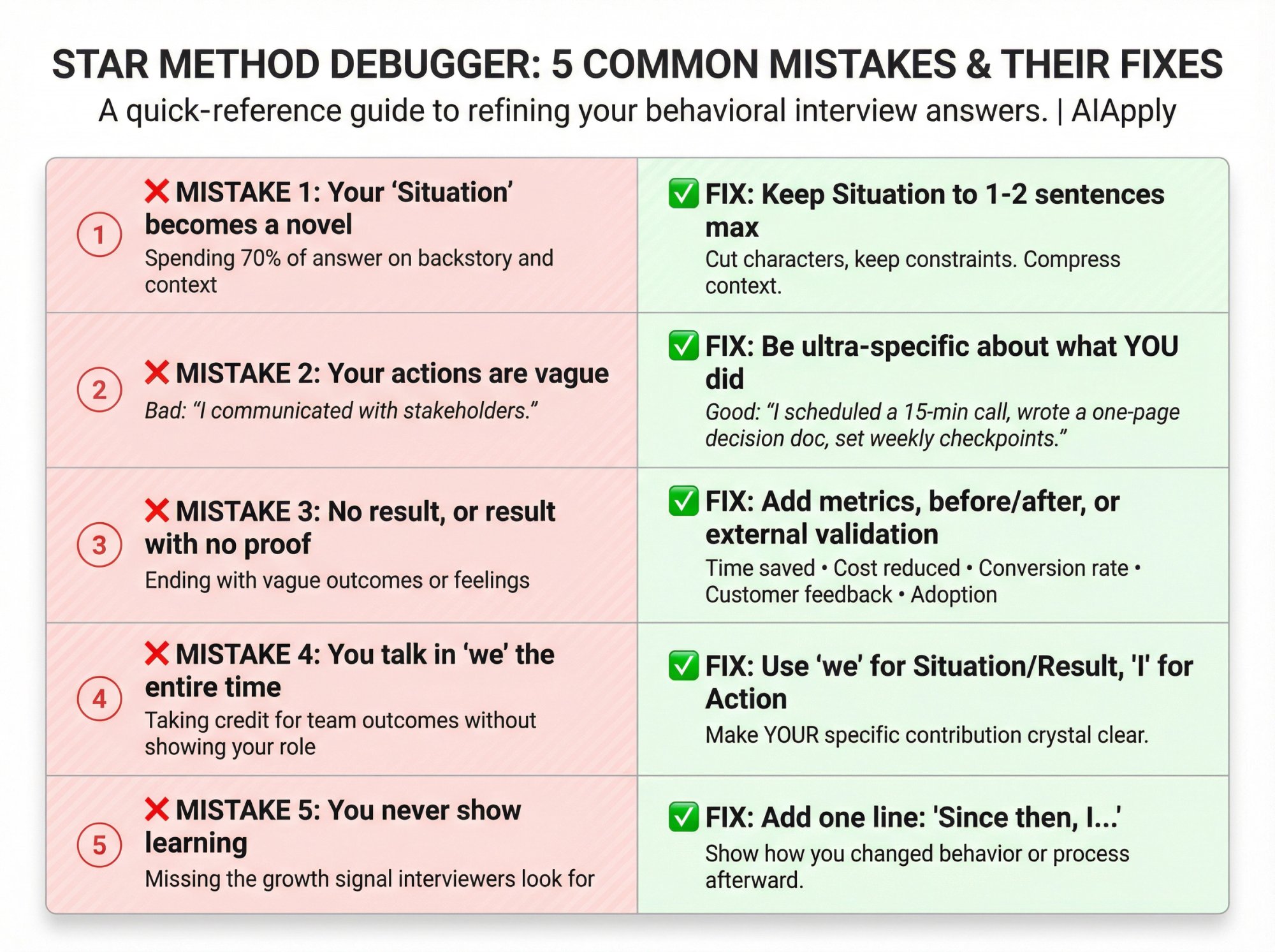

Common STAR Mistakes and How to Fix Them

Mistake 1: Your "Situation" becomes a novel

Fix: If the situation needs more than 2 sentences, you are not compressing. Cut extra people and backstory; keep the constraints.

Mistake 2: Your actions are vague

Bad: "I communicated with stakeholders."

Good: "I scheduled a 15-minute alignment call, wrote a one-page decision doc, and set weekly checkpoints with owners."

Mistake 3: No result, or a result with no proof

Fix: Add one of these:

• Metric (time, cost, conversion, error rate, cycle time)

• Before/after comparison

• External validation (customer feedback, leadership decision, adoption)

Mistake 4: You talk in "we" the entire time

Fix: You can say "we" in Situation and Result. In Action, say "I."

Mistake 5: You never show learning

Fix: Add one line: "Since then, I..."

7-Day STAR Practice Plan Using AIApply

If you want a system instead of random practice, follow this:

Day 1: Build your story bank

Write 10 story titles using the template above.

Day 2: Match stories to the job description

Look for repeated words in the job ad (ownership, stakeholder, ambiguity, customer, pace). Map each to a story.

Day 3: Write 60-second versions

Use the 4-sentence skeleton.

Day 4: Write 120-second versions

Add one more Action detail and one more Result proof point.

Day 5: Drill under time pressure

Record yourself answering 10 prompts with a timer.

Day 6: Simulate the interview

AIApply's Mock Interview Simulator is designed for practicing upcoming interview questions. The AI gives you feedback on your answers, which helps you refine your STAR stories before the real thing.

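Day 7: Prepare final-round "judgment" stories

Pick 2 to 3 stories that show judgment under tradeoffs, such as a tough decision or an ethics moment, and rehearse the likely follow-ups from your story bank template.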

If you want live help during interviews, AIApply's Interview Buddy provides real-time coaching and answers. Use this responsibly: structure and recall support are fair game; inventing facts is not.

Interview Buddy listens to questions in real-time through your browser and suggests structured answers based on your resume and the job description. It's like having an interview coach in your ear, helping you recall your best stories when nerves might make you blank.

Frequently Asked Questions About STAR Method

Q: Can I use the same STAR story for multiple questions?

Yes, absolutely. A well-crafted story about handling a project crisis could work for questions about "significant challenges," "tight deadlines," or "working under pressure." Just adjust which aspects you emphasize. That said, having 8 to 10 distinct stories gives you more flexibility and shows breadth of experience.

Q: What if I don't have work experience for a question?

Use examples from academic projects, volunteer work, sports teams, or personal projects. The key is demonstrating the skill or behavior being tested. Just preface it with context: "I'll draw from my university experience for this one, since it demonstrates..." Employers care about the behavior more than where it happened.

Q: How do I handle questions about failures without looking bad?

Focus on three things: what you learned, what you changed afterward, and (if relevant) a later success that proves you applied the lesson. Everyone fails. What matters is accountability and growth. Frame the result as "we didn't hit the goal, but I learned X, which I applied to Y project where we succeeded."

Q: Should I memorize my STAR answers word-for-word?

No. Memorized answers sound robotic, and you'll stumble if a question is worded differently from the one you rehearsed. Instead, know your story structure cold (the situation, your specific actions, the outcome), and practice telling it naturally multiple times. You want to hit the key points consistently, but with natural language.

Q: What if the interviewer interrupts my STAR answer?

That's common, especially if they want to dig deeper into something you said. Don't panic. Answer their question, then offer to continue if they want more detail. Interruptions can actually be a good sign: they show engagement. Just try to reach the Result early, so that even if they cut you off, they know the story had a positive outcome.

Q: How technical should I get in my STAR answers?

Match your audience. If you're talking to a technical manager, you can use some jargon (but still explain the impact). If you're talking to HR or a non-technical executive, keep it high-level and focus on business outcomes. When in doubt, lead with impact and offer to go deeper if they want details.

Q: Can I use STAR for questions that aren't "Tell me about a time..."?

You can adapt it. If they ask "How do you handle conflict?" you could say "Let me give you a specific example" and launch into a STAR story. Real examples are always stronger than theoretical answers. If they specifically want theoretical advice, give it, but try to ground it in a quick story if possible.

Q: What if I get a question I haven't prepared for?

Take a breath and think for 5 to 10 seconds. It's better to pause than to ramble. Try to map the question to one of your prepared stories (what skill are they really testing?). If you genuinely have no relevant experience, acknowledge it honestly and either share a related example or explain how you'd approach the situation based on principles you follow.

Q: How do I practice STAR if I don't have anyone to do mock interviews with?

Use AIApply's Mock Interview Simulator. It asks common behavioral questions and evaluates your answers using AI. You can also record yourself on your phone, watch it back, and critique yourself. Write down your answers, read them out loud, time yourself. Repetition builds confidence.

Q: Should I bring notes to the interview with my STAR stories?

For phone or video interviews, having brief bullet points nearby can help if you blank (just don't read them verbatim). For in-person interviews, you usually can't reference notes while answering. That's why practice is crucial. You want the stories so familiar that you can tell them naturally under pressure.

Q: What if the result of my story wasn't entirely positive?

Be honest about mixed results, but emphasize what you learned and what improved. For example: "We didn't hit the original timeline, but we delivered a higher-quality product and the client gave us a 5-star review. I learned that sometimes the right tradeoff is time for quality, and I've used that judgment on three projects since."

Q: How many STAR examples should I prepare?

Aim for 8 to 10 solid stories that cover different competencies (leadership, teamwork, problem-solving, failure, initiative, conflict, customer focus, adaptability, etc.). This gives you enough variety to handle most behavioral questions without repeating yourself too much.

Q: Can AIApply help with more than just practicing?

Yes. Beyond the Mock Interview Simulator, we offer the Resume Builder to ensure your resume highlights experiences that make great STAR stories, the AI Cover Letter Generator to connect your experience to specific roles, and Auto Apply to get you more interview opportunities to practice your STAR skills. It's a complete system from application to offer.

The Resume Builder specifically helps you identify which experiences from your background will translate into strong STAR stories. It analyzes your work history and suggests which achievements to emphasize based on the skills interviewers care about most.

Q: Is STAR still relevant in 2026?

Absolutely. If anything, it's more important. With AI screening earlier parts of the hiring process, the human interview is where you differentiate yourself. STAR helps you tell memorable, evidence-based stories that prove you can do the job. Companies aren't moving away from behavioral interviews; they're getting more sophisticated about evaluating them.


Q: Is STAR still relevant in 2026?

Absolutely. If anything, it's more important. With AI screening earlier parts of the hiring process, the human interview is where you differentiate yourself. STAR helps you tell memorable, evidence-based stories that prove you can do the job. Companies aren't moving away from behavioral interviews; they're getting more sophisticated about evaluating them.

